AI-BASED MEAL LOGGING

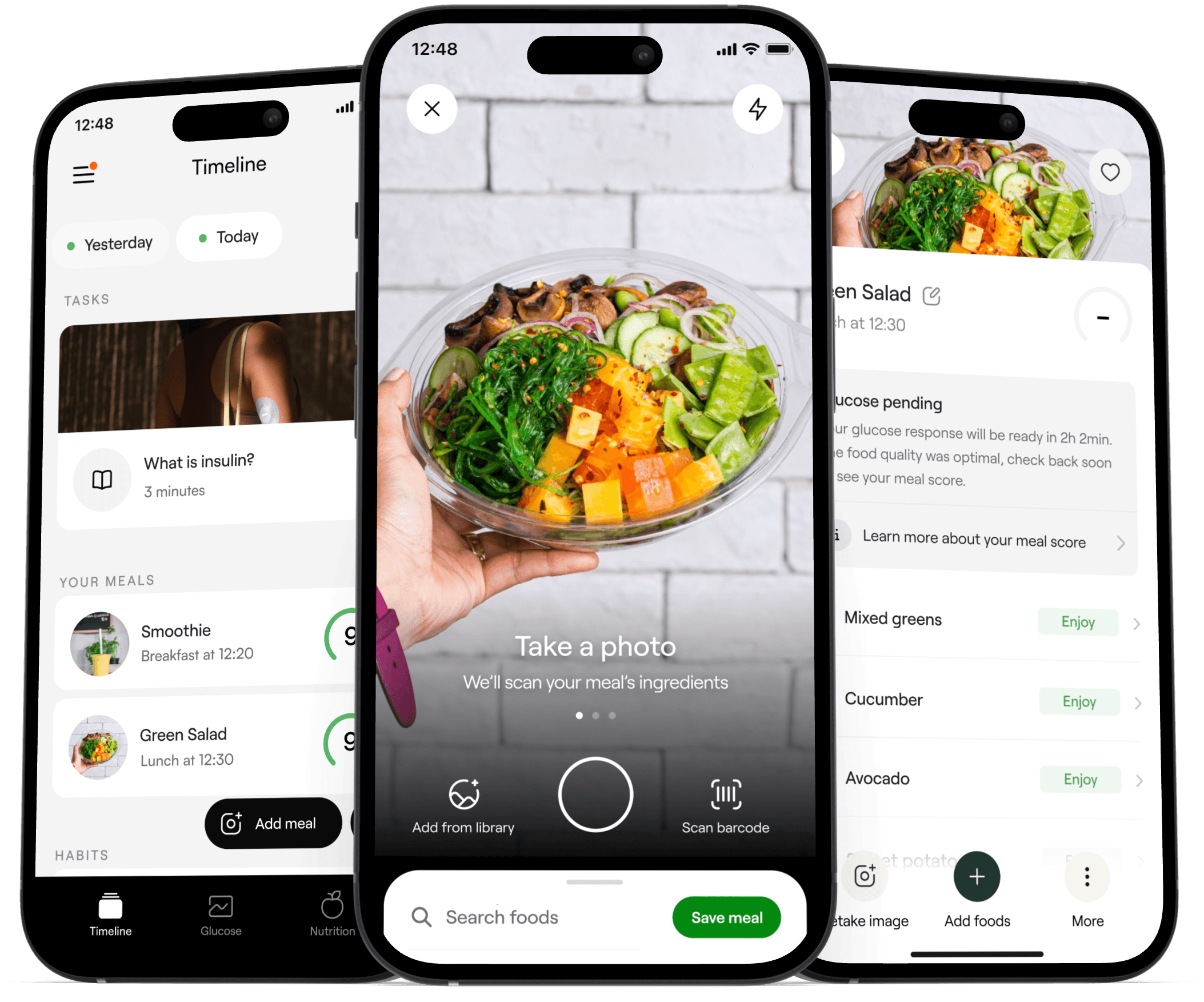

Veri is a personalized metabolic health program that relies heavily on meal logging. The goal of the project was to improve the app's key feature, meal logging, and make it a fast, fun, and useful experience for users.

From explorations to final designs in 5 weeks, while working on multiple projects at the same time

The Veri app focuses heavily on meal logging, so making the meal logging experience as seamless as possible is important to the overall product experience.

Traditional meal logging is usually based on manual text input, which takes time and energy and can become an annoying task to complete multiple times a day.

See an example of the old manual meal logging here: https://tonjrv.com/old-home

Over 60% of the meals logged in the Veri app contained only 0-1 foods.

Note: Meal = breakfast, lunch, dinner, etc. Food = a food item, such as a drink or an ingredient, added to a meal.

The longer users use the app, the fewer foods they add to their meals.

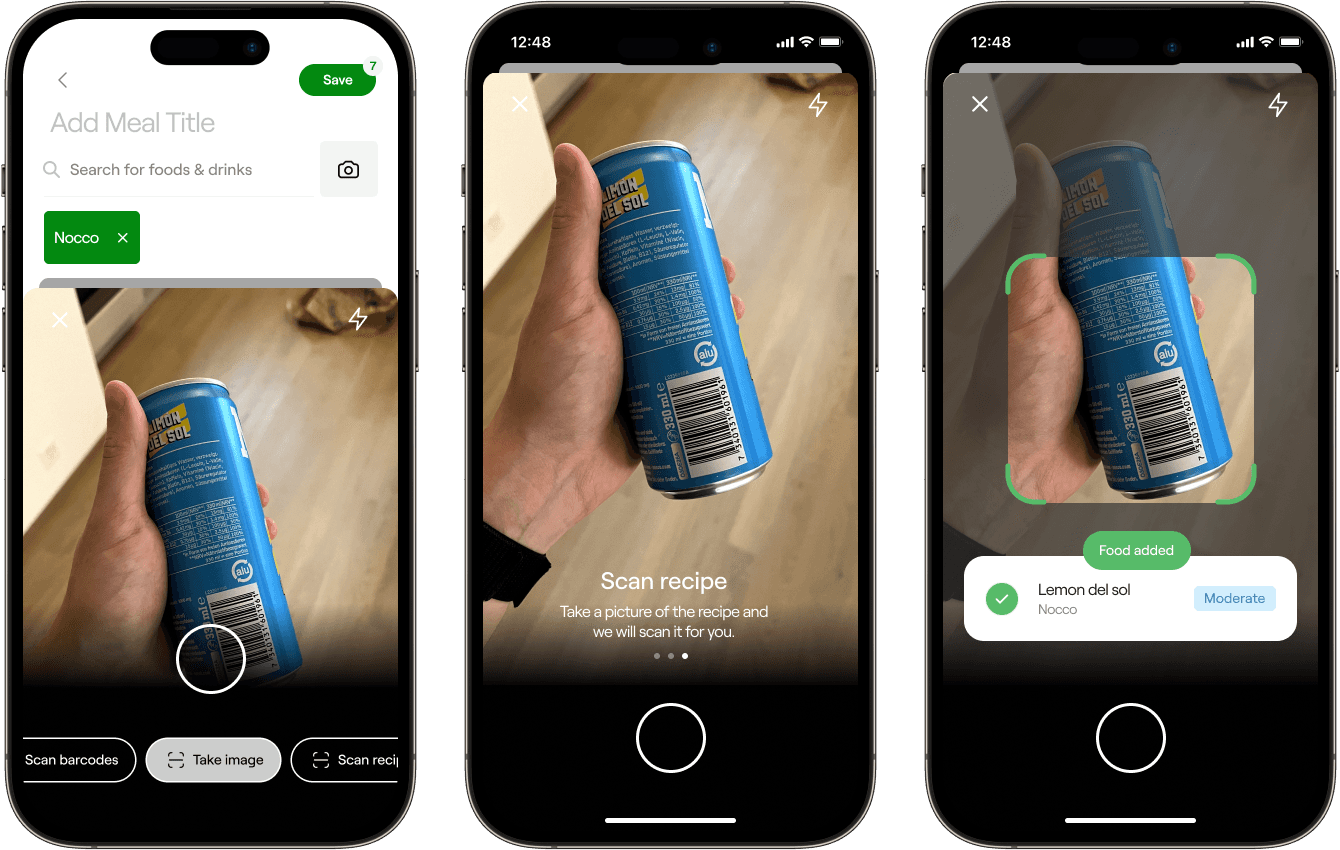

Our team had developed a prototype of an AI-based image detection model that seemed to work well at detecting the ingredients in a meal.

I started to experiment with it and created a few example concepts that got people excited, which led to a completely new project being added to our app roadmap.

As the lead designer of the project, I was responsible for the design process from start to final product, including discovery and definition, ideation and concepting, evaluation and iteration, and supporting developers with implementation.

I also created Rive animations that are used to indicate when the AI is analyzing meal information.

It was clear that faster solutions were desired, since the complexity of meal logging was one of the main pieces of feedback we received from our users.

Based on early discussions and ideation sessions, I came up with a main concept focused on meal logging with the camera.

Early explorations of what meal logging could be with the camera

Users loved the faster meal logging experience, but they asked for more communication after taking the image.

Internal and external testing rounds

Internal testing phase

Canary release

We first released the feature to a small group of our users to validate it with actual usage and to see whether anything needed fixing before rolling it out to all users.

Ensure that the old manual logging flow is easy to access

When we did the canary release of the feature, we noticed that some existing users were confused because they couldn't easily find the old manual logging. We then made sure that the old manual flow is exactly the same as before for anyone who prefers it. This resolved all the feedback we had received, and we got a lot of positive feedback from these customers.

Based on the steps above, I finalized the designs for developer handoff and created interactive animations with Rive.

Figma file with designs

Rive animations

Assisting developers with implementation

Final flow for logging meals:

Just open the camera and take a photo

AI-based meal logging was launched in early 2024 and has been received very well. People praise how easy it is to use and how it makes the key part of the app better than it was before.

Increased overall satisfaction

The app's CSAT score has improved since the introduction of AI logging, and we have received a lot of positive feedback about it.

And our Trustpilot rating is increasing steadily.

Drastically faster meal logging

Looking at the usage data, the time it takes to log a meal has decreased drastically since the launch. Almost 50% of meals are now logged in less than 10 seconds.

However, some users still prefer traditional text-based meal logging.

Around 40% of users still choose traditional logging instead of AI logging

There seem to be many reasons, ranging from privacy concerns and precision issues to an unwillingness to take photos of meals. But we support both use cases, so those who want more precise logging can still log manually.